
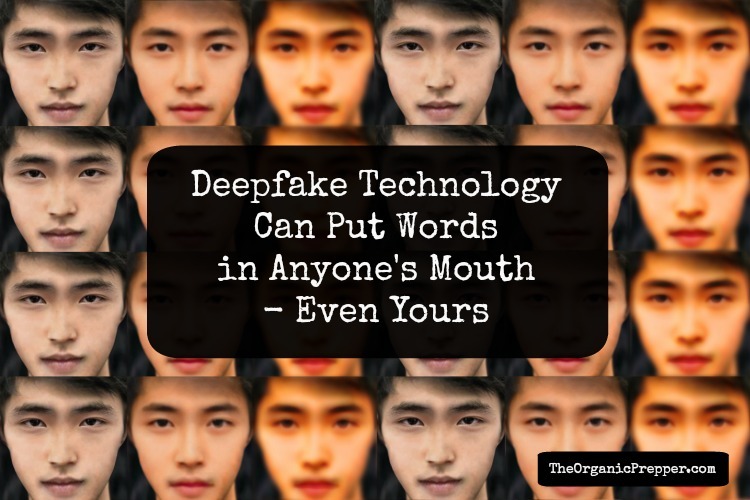

Did you know that technology exists that can create images of people that are not real?

And, did you know that technology exists that can make those “people” talk?

We’ve written about all sorts of dystopian technology on this website, but this might be the creepiest yet. Known as “deepfakes” (a portmanteau of “deep learning” and “fake”), this technology uses artificial intelligence to synthesize human images. It combines and superimposes existing images and videos onto source images or videos using a machine learning technique known as a generative adversarial network (GAN).

Deepfake technology is rapidly evolving and the likely consequences are troubling.

Deepfake technology has already been used to create fake news, malicious hoaxes, fake celebrity pornographic videos, and revenge porn. We know how fast the internet can turn on a person from just a camera angle. Imagine the chilling possibilities with this kind of technology.

In a February 2019 report called “ThisPersonDoesNotExist.com Uses AI to Generate Endless Fake Faces,” James Vincent raises chilling concerns over the technology:

As we’ve seen in discussions about deepfakes (which use GANs to paste people’s faces onto target videos, often in order to create non-consensual pornography), the ability to manipulate and generate realistic imagery at scale is going to have a huge effect on how modern societies think about evidence and trust. Such software could also be extremely useful for creating political propaganda and influence campaigns.

In other words, ThisPersonDoesNotExist.com is just the polite introduction to this new technology. The rude awakening comes later. (source)

It looks like “later” is here:

A new algorithm allows video editors to modify talking head videos as if they were editing text – copying, pasting, or adding and deleting words.

A team of researchers from Stanford University, Max Planck Institute for Informatics, Princeton University and Adobe Research created such an algorithm for editing talking-head videos – videos showing speakers from the shoulders up. (source)

The possible consequences of this technology are horrifying to ponder, but Melissa Dykes of Truthstream Media did just that in this chilling video.

It is getting more and more difficult to discern fakes from reality.

“Our reality is increasingly being manipulated and mediated by technology, and I think we as a human race are really starting to feel it,” Dykes writes:

We are coming to a point in our post-post-modern society where seeing and hearing will not be believing. The old mantra will be rendered utterly worthless. The more we consider the sophistication of these technologies, the more it becomes clear that we are just scratching the surface at attempting to understand the real meaning of the phrase “post-truth world”. It’s not a coincidence this was an official topic at last year’s ultra-secretive elite Bilderberg meeting, regularly attended by major Silicon Valley players including Google and Microsoft, either. (source)

What do you think?

What will deepfake technology be used for? Do you think it will eventually become impossible to identify deepfakes? What will the consequences be? Please share your thoughts in the comments.

About the Author

Dagny Taggart is the pseudonym of an experienced journalist who needs to maintain anonymity to keep her job in the public eye. Dagny is non-partisan and aims to expose the half-truths, misrepresentations, and blatant lies of the MSM.

Likely uses for deepfake “evidence”:

Propaganda to sell “false flag” events to the public, to justify military intervention. Had Lyndon Johnson had such technology, he could have produced a video of the Gulf of Tonkin attack (that never happened) to sell the fraudulent Vietnam war to Congress and to the American public. The equivalent today would be a fake video to sell Mike Pompeo’s unbelievable story of Iranians torpedoing that Japanese freighter in the Strait of Hormuz. (Crewmen reported seeing incoming “somethings” flying in above water just before the explosion; torpedoes move strictly underwater. Also, Iranians were reported to be helping rescue some of the crew members.)

False “evidence” to trash the reputation and public image of political opponents — especially around election time. In the same disgusting spirit as the very recent uploading of child porn onto the Infowars server of Alex Jones, there are enough sociopaths among us to guarantee deepfakery for such repulsive purposes.

False “evidence” to sell a faked probable cause story to a judge to get a search warrant that should never have been issued.

False “evidence” to destroy the reputation and career of a hate-filled stalker’s target person.

Like all tools which can be used for good or bad purposes, “deepfakes” can be used for entertaining movies — or to destroy real people.

–Lewis

I think the basic motivation for researching and developing “deepfake” technology was linked to making the evil possible rather than the good.

Since the 2016 election, I do not automatically believe anything on the internet or reported in the news. I give it a few hours or a day to see if it gets debunked or proven false, or to see what the slant is.

Unfortunately, people are too apt to just believe whatever, forward it, re-tweet it etc.

Then when it is proven false, no one forwards or re-tweets that.

I was in the truck on my way to town when I heard this on the radio: https://www.wbur.org/onpoint/2019/06/20/deepfakes-fake-news-videos-social-media

It is worth a listen.

Get informed.

I will listen to this as soon as possible. Thanks for that link.

And of course, it will be owned and used liberally by the Progressives.

According to the OnPoint article I posted above, it is a freeware program anyone can download and use.

The guest expert they had on stated that previously it took a degree of skill and talent to do that kind of video production.

Now, with the self-learning AI, anyone can do it.

Scary.

I was waiting for this. There will be hologram projectors and hologram videos that will display known people, or authoritarian types who will give speeches on what they want the people to do and how to act. Some people in rural areas will believe that these are visions from heaven or similar mystic places, and they will believe that these holograms are real and follow the commands. These commands may involve some very criminal acts. A tribal group living in the rural Amazon, rural Borneo, or other such places may take the words at face value and follow the commands. These holograms may be able to be projected from space, an airplane, a helicopter, or even a hidden area nearby. It could even happen in real time, so that it seems like the tribal people are really talking with some authoritative being.

Eric

They will produce entirely faked news reports to justify martial law, war, and every draconian act. And the sheeple will believe it happened. For example, there’s some really grainy footage of “Iranian” sailors emplacing limpet mines on a tanker in the Strait of Hormuz. I wonder how much it would cost the DoD/CIA/Mossad to fabricate some grainy footage of a small boat with men inside it putting limpet mines on a larger vessel? I’m not saying that didn’t happen, because Islam is dumb enough to do that, as we see from bombings and attacks every day, just that these events can be and are fabricated. Look at all the “White Helmets” fabrications in Syria, and the world takes them seriously.